Winter Break ❄️

Over this last stretch and the Christmas holidays, I took a little break. I honestly could not find the motivation to write. But I thought it was a good idea to ask myself: 'What should I be working on during this time?' Holidays are a weird time because you're supposed to be with your family, loved ones and friends and do nothing other than rest. I simply get bored really easily.

Here’s my winter break accountability log for future-me to laugh at:

HackTheBox: I finally signed up for the HackTheBox Academy and completed the first four modules. I learned the basics of Incident Response (IR), SIEM fundamentals with the ELK stack, Windows logging and threat hunting. More on that coming soon.

I watched the announcement and read the report on OpenAI’s new o3 model. It seems like a really big step up from o1 (I wrote a little article about it if you want to read it).

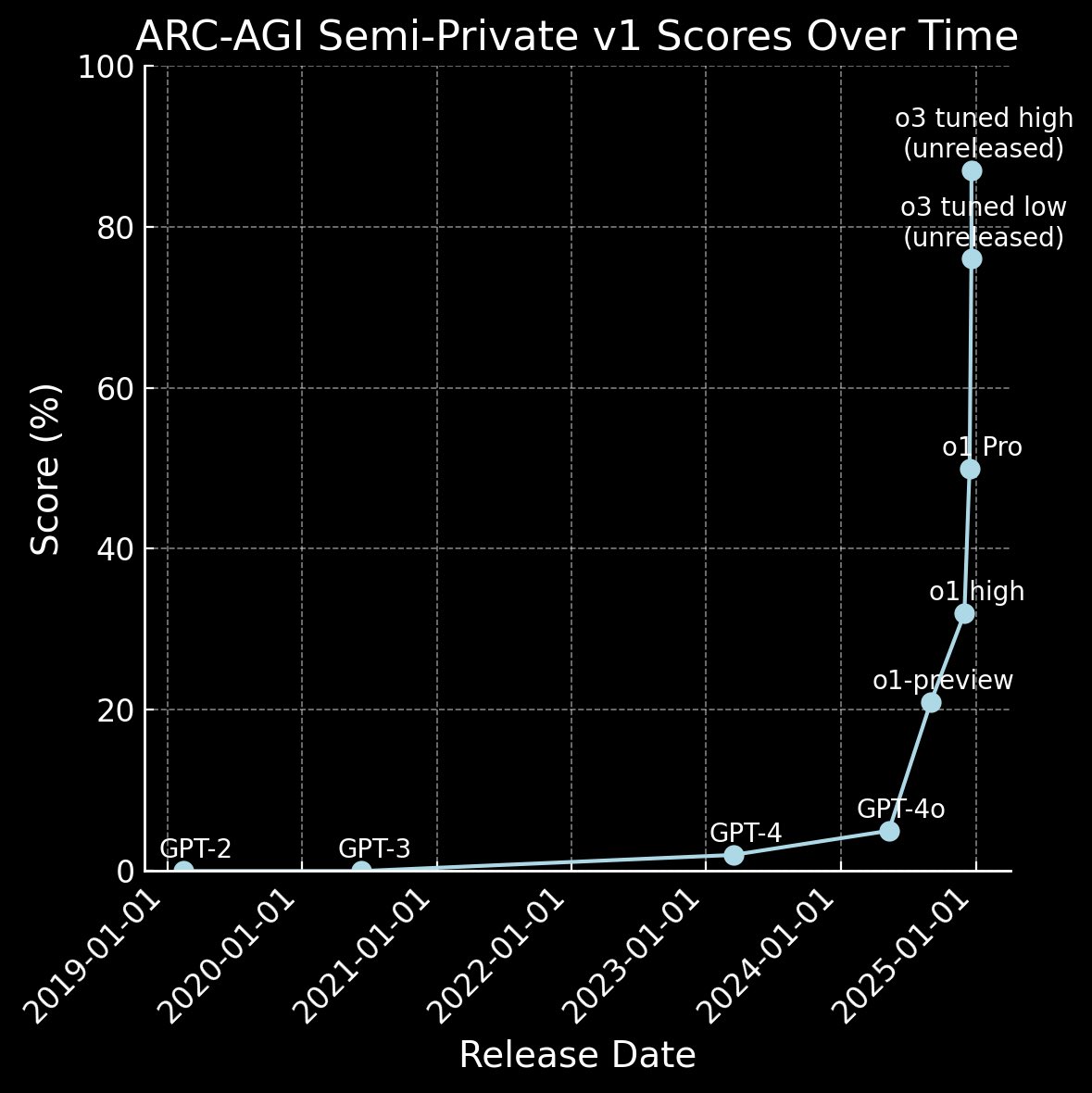

The 71.7% score on SWE-bench, which consists of real GitHub issues to solve, and the 25.2% score on FrontierMath, which consists of really difficult math problems, made me gasp. And of course I have to mention the 88% result on ARC-AGI. Having watched Chollet’s interview weeks ago, the timeline feels pretty clear: how fast things in this field are moving is astonishing.

DeepSeek v3 technical report: I got really interested in this new Chinese model, since the results they achieved with relatively modest resources seem pretty surprising. To be precise, 2048 NVIDIA H800 GPUs, which are less powerful than the NVIDIA H100s Meta used for Llama training. Still, all that glitters is not gold. Both because I clearly did not understand all the technicalities of the report, and because, from various tests, it seems it was heavily post-trained using OpenAI's GPT-4o.

OLMo 2 technical paper: I discovered OLMo while reading up on the last paper I wrote about. OLMo 2 came out at the end of November, but I only read about it yesterday when the technical report came out (really great naming to be honest). I do believe open-sourcing this knowledge is crucial. And by open-sourcing I mean the weights of the model, the data used for the pre-training and the post-training, and its evaluation.

For those times when reading felt too much like work, here's a short list of YouTube videos I liked:

- Welch Labs mechanistic interpretability: this one really hits hard. Welch Labs has this magical ability to make complex topics, such as mechanistic interpretability, feel almost accessible. Highly recommended!

- Bob McGrew interview at Unsupervised Learning: nice interview with the former OpenAI Chief Research Officer.

- Dylan Patel lecture at Stanford: really fascinating topics such as data center construction, tokenomics, and AI inference costs. He also has an incredible, rabbit-hole-worthy blog called SemiAnalysis.

So, what did I get done these past two weeks? I studied cybersecurity fundamentals, fell down multiple AI rabbit holes reading technical papers (some more understandable than others), and watched smart people explain complex stuff on YouTube. Kinda how the rest of the year went. Here's to another one like this!